We really appreciate the patience everyone has shown while waiting for our headsets to ship. We know it's been a long wait, and that too much time has passed since our last update.

We've recently been thinking about the approach we've taken to this project. It's basically been something like this:

- Work on stuff.

- Tell everyone what we've been working on. Maybe show some pictures and stuff.

- …but don't release anything until everything is finished.

We're starting to think that a better approach is something like this:

- Tell people what the next milestone is.

- Work on stuff.

- Talk about progress (with emphasis on the milestone).

- Release an intermediate product.

- Repeat until the full product is finished.

We think this approach will focus our efforts and let us deliver value to people more quickly than keeping everything under wraps until one big release.

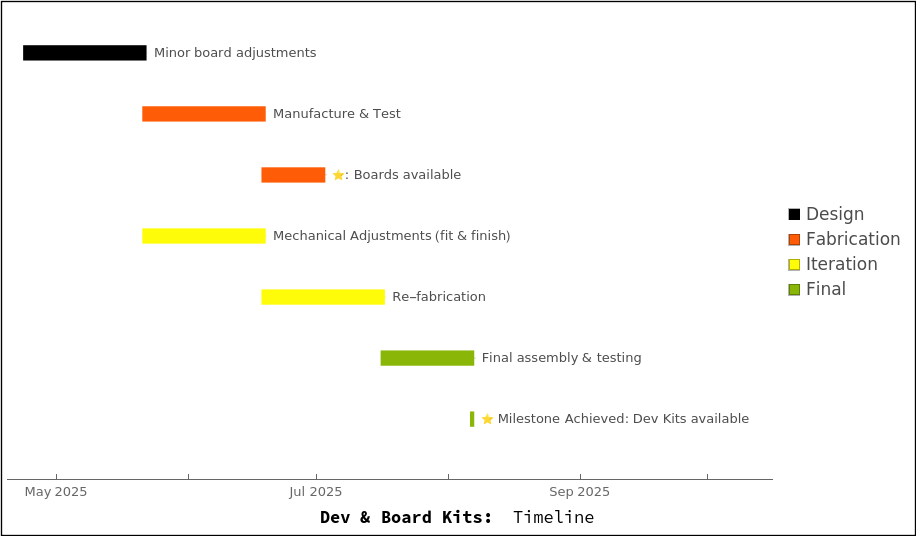

1 Intermediate Product #1: VR Board Kits (2 months) & Dev Kits (1.5 months)

By "Dev Kits" we mean stripped-down variants of the VR headsets we've been working on. "Board Kits" are the same thing, but without our chassis. These are meant for researchers, open-source VR enthusiasts, and anyone who might be interested in contributing to or forking our development. We don't expect to sell many of these (since they're not feature-complete headsets), but they give us an opportunity to get an intermediate product out to interested parties more quickly.

Our first-iteration Dev Kits will include the raw basics:

- 2 x VR Displays

- 2 x Sets of (3-element non-fresnel) VR lenses with independent manual IPD adjustment

- Basic boards (1 x Connector Board, 2 x Display Interposers, 1 x VXR Board) to route images from the host to the VR Displays

- Our updated chassis + headset exterior to house everything (with an open front enclosure until our front-facing sensor stuff is finished).

From there, we will layer on everything else as we finish them (releasing the intermediate parts along the way) until our full headset is finished. A rough overview of what these Dev Kits will and will not contain is as follows:

| Item | Included in v1 Dev Kit? | Notes |
|---|---|---|
| 2 x VR Displays (2448x2448) | Yes | Done |
| 2 x Display Interposer Boards | Yes | Requires slight adjustments to mounting |
| 1 x VXR Board (splits DisplayPort into MIPI-DSI) | Yes | Requires slight adjustments to power supplies to fit new chassis (see below) |
| 1 x Connector Board | Yes | Requires slight adjustments to mounting |
| 2 x Lenses (3-element non-fresnel) | Yes | Done |
| 1 x Chassis | Yes | Requires alterations for manual IPD adjustment mechanism (see sections below) |
| 2 x Independent IPD Manual Adjustment Knobs | Yes | Dev-Kit-only solution en route to our automatic IPD adjustment |
| 1 x Foam Facial Interface | Yes | Done |
| 2 x Lens Holders | Yes | Done |
| 1 x Headset Strap | Yes | Done (off-the-shelf item) |
| 1 x Headset Exterior | Yes | Included in v1 iteration, except for front enclosure |
| 1 x (20 x 20-Pin Micro-Coax Cable) | Yes | Connects VXR Board to Connector Board |
| 2 x (40 x 30-Pin Micro-Coax Cables) | Yes | Connects each Display Board to the VXR Board |
| 50 x CABLINE CA II Connectors | Yes | Used for various PCBs |
| 1 x USB-A to USB-C cable | Yes | Required to power the displays |
| 1 x HDMI to USB-C | Yes | To connect displays to a Compute Pack substitute (NUC, etc.) |
| 2 x Camera Boards | To be released in v2 iteration | |
| 1 x FPGA Board | To be released in v2 iteration | |
| 1 x FPGA Adapter Board | To be released in v2 iteration | |
| 2 x Eye Tracking Sensors + Lenses | To be released in v3 iteration | |
| 2 x Front-Facing Camera Lenses | To be released in v2 iteration | |
| 2 x Tracking Lenses | To be released in v2 iteration | |
| 2 x IPD Motors | To be released in v3 iteration | |
| 1 x Compute Enclosure | To be released as a separate standalone Compute Pack item (see next section) | |
| 1 x Compute Board | To be released as a separate standalone Compute Pack item (see next section) | |
| 1 x Compute Pack | To be released as a separate standalone Compute Pack item (see next section) | |
| 1 x Battery | To be released as a separate standalone Compute Pack item (see next section) | |
| 1 x Heat Management System (fan, heat sink, etc.) | To be released as a separate standalone Compute Pack item (see next section) | |

For tracking, our Dev Kit will unofficially support the Xvisio XR50 through a monado driver we implemented earlier last year for testing purposes; our final headset will use our own tracking solution, though (see the 6dof tracking section below).

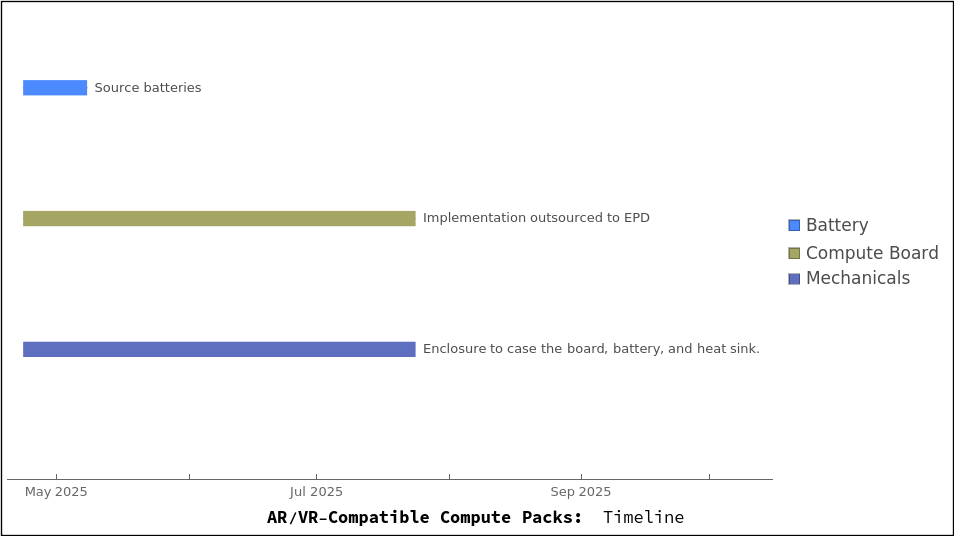

2 Intermediate Product #2: Compute Packs (for AR Headsets, Our VR Headset, etc) (3 months)

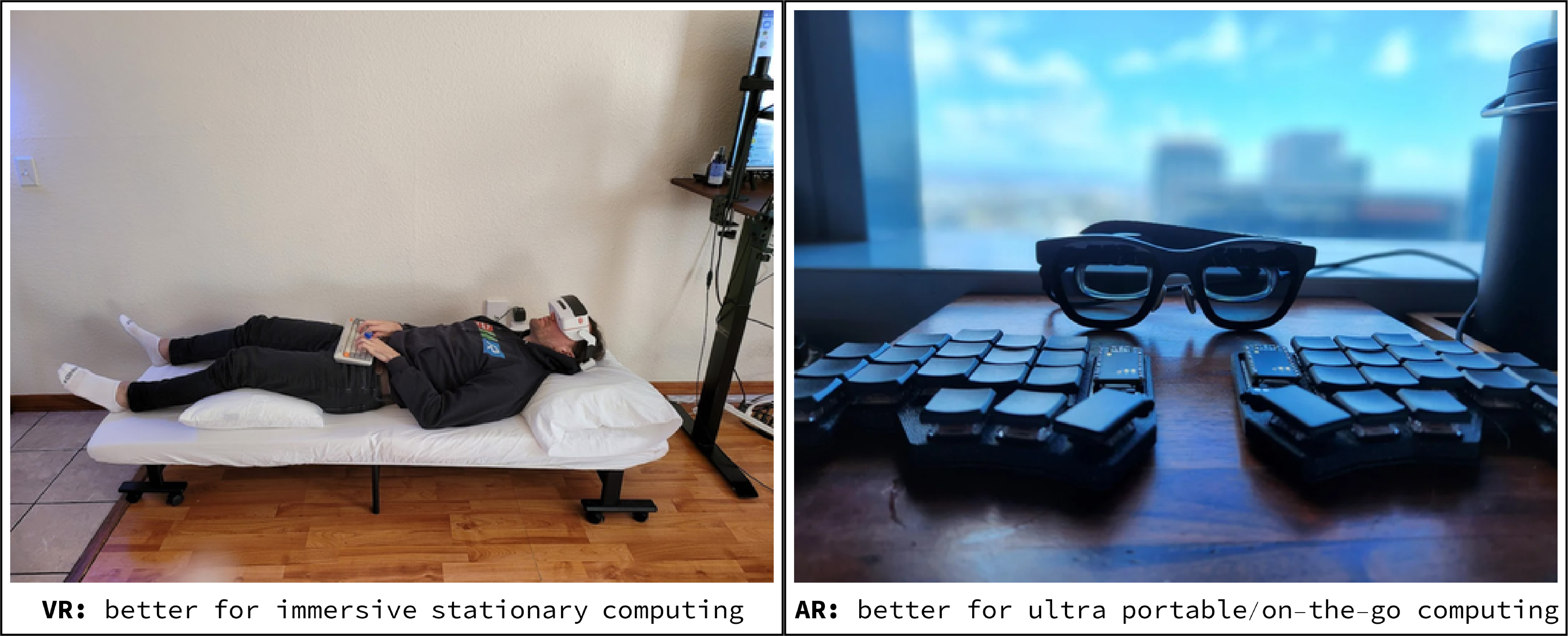

Some of our backers have expressed interest in the wave of AR headsets released over the past year. These AR headsets basically trade off a lower FOV (~50°, about half that of VR headsets) for better ergonomics and even slightly higher PPD (~45 PPD).
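As a back-of-the-envelope illustration of this trade-off, average PPD is roughly the horizontal pixel count divided by the horizontal FOV. The sketch below assumes a ~100° horizontal FOV for our 2448-pixel-wide VR displays and a hypothetical 1920-pixel-wide AR display spanning ~45° horizontally (quoted FOV figures are often diagonal). Both FOV numbers are illustrative assumptions, not measured specs, and the formula ignores lens distortion, which typically concentrates PPD toward the center of the view.

```python
def average_ppd(horizontal_pixels: int, horizontal_fov_deg: float) -> float:
    """Average pixels per degree across the horizontal field of view.

    Simplification: assumes pixels are spread evenly over the FOV and
    ignores lens distortion.
    """
    if horizontal_fov_deg <= 0:
        raise ValueError("FOV must be positive")
    return horizontal_pixels / horizontal_fov_deg


# Illustrative inputs only (assumed FOVs, not measured specs):
vr_ppd = average_ppd(2448, 100.0)  # 2448-px-wide VR display over ~100°
ar_ppd = average_ppd(1920, 45.0)   # hypothetical 1920-px AR display over ~45°
print(f"VR: {vr_ppd:.1f} PPD, AR: {ar_ppd:.1f} PPD")
```

Halving the FOV while keeping a similar pixel count is what pushes the AR number up, even though the AR panel has fewer pixels.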

Though we think VR headsets are ultimately better for stationary setups to achieve maximum immersion, the AR form factor could be better for super-portable, on-the-go computing (e.g. plane trips, train rides, walking around outside, etc).

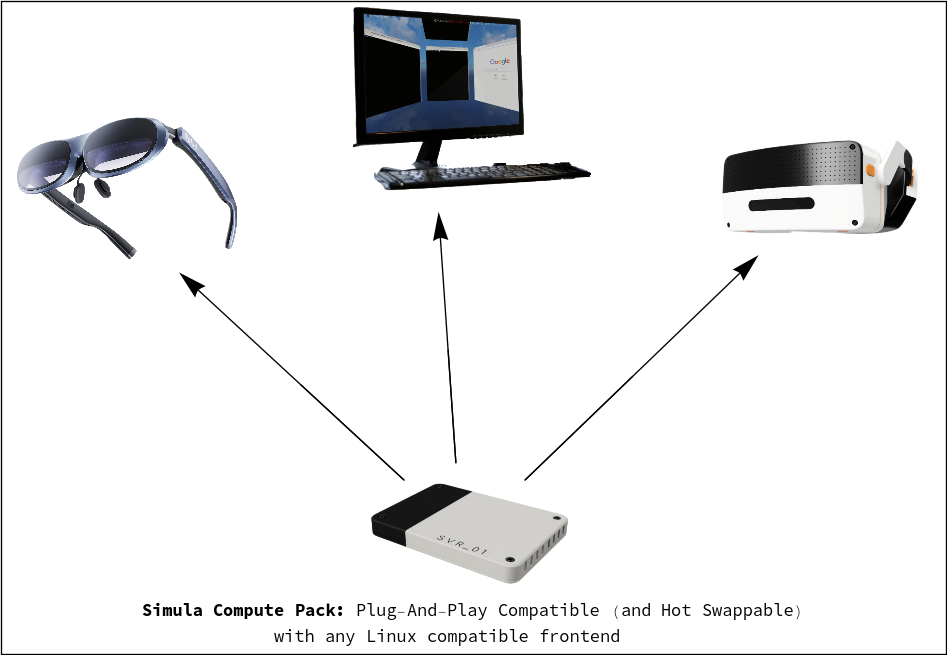

Towards this end, we want to release our Simula One compute pack (which we're working on anyway) early as an intermediate product. It will be Simula-compatible with certain Linux-friendly AR headsets, alongside our own VR headset.

Releasing this allows us to get something useful to people sooner. As a demonstration, we have Simula running on a Rokid Max, using a reverse-engineered driver from Monado as a reference case.

Other headsets we're interested in potentially targeting include the Rokid Max 2 and Xreal One. That said, since these headsets don't offer any Linux support, we (unfortunately) have to reverse-engineer a driver for each of them to get them working with Simula. This involves figuring out how each headset expects to communicate with the host to do things like power on its displays, send parsable tracking data every frame, and so forth. Though these drivers can be time-consuming to implement, we think it might be worth adding support for a couple of the most popular AR headsets to make our compute packs more valuable to people.
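To illustrate what "parsable tracking data" means in practice, here's a minimal sketch of the kind of decoder a reverse-engineered driver ends up containing. The report layout (report ID, timestamp, raw gyro and accelerometer counts) and `IMU_REPORT_ID` are entirely hypothetical: the real layout for each headset is exactly what the reverse-engineering work has to uncover, and Monado's actual drivers are written in C rather than Python.

```python
from __future__ import annotations

import struct
from dataclasses import dataclass

# HYPOTHETICAL report layout, for illustration only (little-endian):
#   uint8  report_id
#   uint32 timestamp_us
#   int16  gyro_x, gyro_y, gyro_z     (raw sensor counts)
#   int16  accel_x, accel_y, accel_z  (raw sensor counts)
IMU_REPORT = struct.Struct("<BI3h3h")
IMU_REPORT_ID = 0x01  # hypothetical ID for IMU reports


@dataclass
class ImuSample:
    timestamp_us: int
    gyro: tuple[int, int, int]
    accel: tuple[int, int, int]


def parse_imu_report(data: bytes) -> ImuSample | None:
    """Decode one raw report; return None for reports we don't understand yet."""
    if len(data) < IMU_REPORT.size or data[0] != IMU_REPORT_ID:
        return None
    _report_id, ts, gx, gy, gz, ax, ay, az = IMU_REPORT.unpack_from(data)
    return ImuSample(ts, (gx, gy, gz), (ax, ay, az))


# Usage: build a fake report with the same hypothetical layout and decode it.
raw = IMU_REPORT.pack(IMU_REPORT_ID, 123456, 10, -20, 30, 0, 0, 4096)
sample = parse_imu_report(raw)
print(sample)
```

Returning `None` for unknown report IDs mirrors how these drivers tend to grow: one understood message type at a time.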

If everything goes according to plan, we expect this milestone product to be released in roughly 3 months. See the sections below for more fine-grained time estimates.

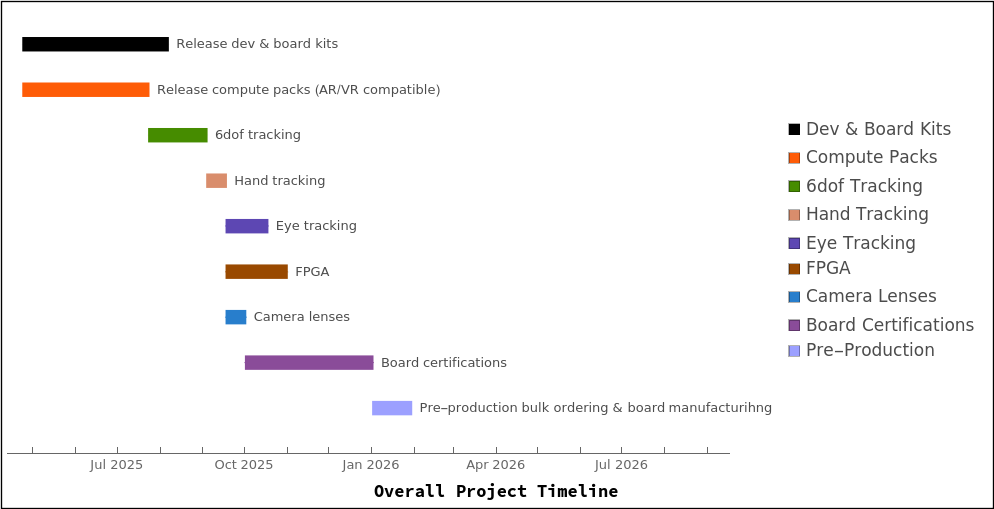

3 Remaining timeline for the rest of our headset

Below is an overview of the things we still have to do to finish our VR headsets. We've included rough time estimates for everything so people know what to expect.

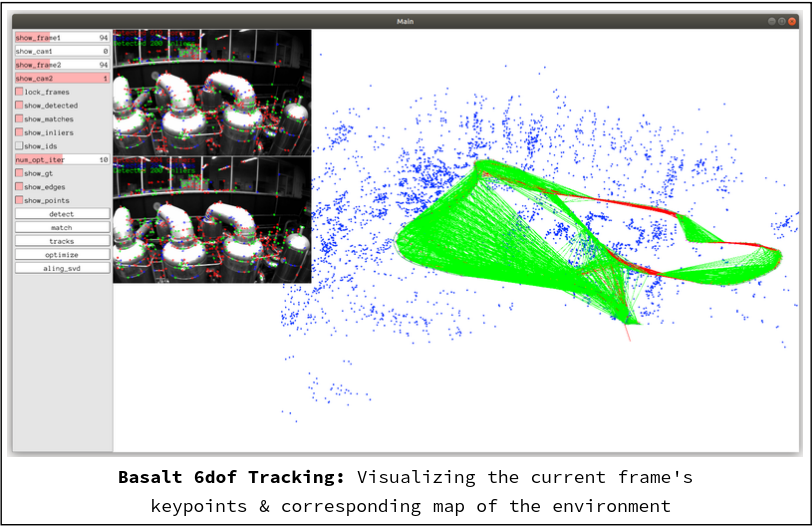

3.1 6dof Tracking (6 weeks for something shippable, with additional software updates incoming)

We're working with the Basalt team to implement our headset's 6dof tracking (using a fork that works with monado). Basalt is a FOSS Visual-Inertial Odometry (VIO) system that fuses camera feeds with IMU data to provide sub-millimeter-accurate 6dof pose estimates.

Basalt's tracking operates on two levels that work in tandem: (i) a "local layer" that handles rapid pose corrections (using only the most recent frames to correct for sensor drift), and (ii) a "global layer" that stores and updates a sparse set of keypoints (visually distinctive features of the environment, such as the corners of objects) as a longer-term positional reference.
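Here's a toy illustration of the two-layer idea. It is explicitly not Basalt's implementation (Basalt solves an optimization problem over its states rather than averaging): a bounded sliding window stands in for the local layer, and a dictionary of persistent landmark estimates, refined with a running mean, stands in for the global sparse keypoint map. `TwoLayerMap` and the landmark IDs are hypothetical names.

```python
from collections import deque


class TwoLayerMap:
    """Conceptual sketch of a local/global split (NOT Basalt's code).

    - Local layer: a bounded sliding window of the most recent frames,
      used for fast, short-horizon corrections.
    - Global layer: a sparse map of persistent landmarks (keyed by a
      hypothetical landmark ID) kept as a long-term positional reference.
    """

    def __init__(self, window_size: int = 10):
        self.window = deque(maxlen=window_size)  # oldest frames drop out automatically
        self.landmarks = {}  # landmark_id -> (position estimate, observation count)

    def add_frame(self, frame_id, observations):
        """observations: dict mapping landmark_id -> observed (x, y, z) position."""
        self.window.append(frame_id)
        for lid, pos in observations.items():
            if lid in self.landmarks:
                old, n = self.landmarks[lid]
                # Running mean: refine the stored estimate with each new sighting.
                new = tuple(o + (p - o) / (n + 1) for o, p in zip(old, pos))
                self.landmarks[lid] = (new, n + 1)
            else:
                self.landmarks[lid] = (tuple(pos), 1)


# Usage: five frames, but only the three most recent stay in the local window,
# while the landmark estimate keeps accumulating evidence from all five.
m = TwoLayerMap(window_size=3)
for i in range(5):
    m.add_frame(i, {"corner_a": (1.0 + 0.1 * i, 0.0, 2.0)})
print(list(m.window))
print(m.landmarks["corner_a"])
```

The point of the split is that the window stays small and cheap no matter how long the session runs, while the landmark map is what lets the system recognize places it has already seen.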

While Basalt provides a good basis for a 6dof tracking system, it's not state of the art, and it needs SLAM modernizations to be competitive with modern headsets (e.g. longer-term map storage that persists for weeks instead of minutes). These updates can be pushed out later as over-the-air updates, though, and aren't strictly required for us to ship.

On our end, we still need to finish and mount our Tracking Camera Boards (with Sony IMX900 image sensors + an IMU for orientation data). For this we estimate ~1 week of design work and 4 weeks of bring-up. After this, getting a basic version of Basalt up and running (with all of the sensor calibrations, etc) should take us another ~1 week.

3.2 Hand-Tracking (2 weeks for hardware validation, with software updates incoming)

We've teamed up with the lead developer of Project Babble (an open-source mouth tracking project) to help improve monado's hand-tracking system (known currently as "mercury").

Mercury's current system works as follows:

1. Takes stereo camera images as input for each frame.

2. Uses a CNN to generate hand keypoints & joints on those frames.

3. Uses historical poses to slightly alter and adjust the keypoints/joints from (2) (using e.g. a Levenberg-Marquardt kinematic optimizer).

4. Final output: a 3D hand with pose information (joint positions, orientations, etc.), which is stored in a historical log for future iterations of (3).
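To give a feel for what step (3) does, here is a toy Levenberg-Marquardt fit, which is not Mercury's actual code: a hypothetical two-joint planar "finger" whose joint angles are adjusted so its fingertip matches a detected keypoint, with a small weighted prior pulling the solution toward the previous frame's pose (standing in for the historical poses). The bone lengths, the prior weight `w`, and all function names are illustrative assumptions.

```python
import math

L1, L2 = 1.0, 0.8  # hypothetical bone lengths of a toy 2-joint planar "finger"


def fingertip(theta):
    """Forward kinematics: joint angles (radians) -> 2D fingertip position."""
    t1, t2 = theta
    return (L1 * math.cos(t1) + L2 * math.cos(t1 + t2),
            L1 * math.sin(t1) + L2 * math.sin(t1 + t2))


def residuals(theta, observed, prior, w):
    """Keypoint error plus a weighted 'stay close to last frame' prior."""
    x, y = fingertip(theta)
    return [x - observed[0], y - observed[1],
            w * (theta[0] - prior[0]), w * (theta[1] - prior[1])]


def jacobian(theta, w):
    """Partial derivatives of each residual with respect to (t1, t2)."""
    t1, t2 = theta
    s1, c1 = math.sin(t1), math.cos(t1)
    s12, c12 = math.sin(t1 + t2), math.cos(t1 + t2)
    return [[-L1 * s1 - L2 * s12, -L2 * s12],
            [L1 * c1 + L2 * c12, L2 * c12],
            [w, 0.0],
            [0.0, w]]


def cost(r):
    return 0.5 * sum(v * v for v in r)


def levenberg_marquardt(observed, prior, w=0.01, iters=50, lam=1e-3):
    theta = list(prior)  # warm-start from the previous frame's pose
    r = residuals(theta, observed, prior, w)
    for _ in range(iters):
        J = jacobian(theta, w)
        # Damped normal equations (J^T J + lam * I) delta = -J^T r, solved as 2x2.
        a = sum(row[0] * row[0] for row in J) + lam
        b = sum(row[0] * row[1] for row in J)
        d = sum(row[1] * row[1] for row in J) + lam
        g0 = sum(row[0] * ri for row, ri in zip(J, r))
        g1 = sum(row[1] * ri for row, ri in zip(J, r))
        det = a * d - b * b
        delta = ((-d * g0 + b * g1) / det, (b * g0 - a * g1) / det)
        candidate = [theta[0] + delta[0], theta[1] + delta[1]]
        r_new = residuals(candidate, observed, prior, w)
        if cost(r_new) < cost(r):
            theta, r = candidate, r_new
            lam *= 0.1   # step helped: trust the Gauss-Newton direction more
        else:
            lam *= 10.0  # step hurt: damp harder (closer to gradient descent)
    return theta


# Usage: pretend the CNN detected the fingertip of a finger posed at (0.5, 0.7).
observed = fingertip((0.5, 0.7))
estimate = levenberg_marquardt(observed, prior=(0.4, 0.6))
print([round(t, 3) for t in estimate])
```

The damping factor `lam` is what makes LM robust here: it shrinks after good steps (fast Gauss-Newton convergence) and grows after bad ones (small, safe gradient-like steps). A real hand model has many more joints and keypoints, but the loop has the same shape.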

In theory, the only thing we need to do to get this older system working with our headsets is calibrate and sync up our tracking cameras with it (~1-2 weeks), so it isn't a major bottleneck.

The model adjustments we want to make (though these aren't strictly required for shipping):

- Use a lighter-weight vision transformer instead of a CNN for step (2).

- Improve the tracking FOV: Basically, the current system requires hands to be visible to both tracking cameras in order for it to be able to infer depth positions of the hand points. Our planned improvements will allow for depth estimations even when a hand is visible to only a single camera, which effectively increases the tracking volume beyond the stereo overlap region of the tracking cameras.
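To see why stereo overlap matters in the current system: for a rectified stereo pair, depth follows from disparity as Z = f·B / d (focal length in pixels times the camera baseline, divided by the horizontal pixel offset of the point between the two views). A point visible in only one camera has no disparity, so classic triangulation returns nothing. The focal length and baseline below are hypothetical values, and the planned single-camera depth estimation is not shown here.

```python
from typing import Optional


def stereo_depth(focal_px: float, baseline_m: float,
                 x_left: Optional[float], x_right: Optional[float]) -> Optional[float]:
    """Depth of a point from a rectified stereo pair: Z = f * B / disparity.

    Returns None when the point is missing from either image: with only one
    view there is no disparity, so classic stereo can't recover depth.
    """
    if x_left is None or x_right is None:
        return None
    disparity = x_left - x_right  # horizontal pixel offset between the views
    if disparity <= 0:
        return None  # point at infinity or a bad match
    return focal_px * baseline_m / disparity


# Hypothetical camera: 500 px focal length, 10 cm baseline.
print(stereo_depth(500.0, 0.1, 340.0, 315.0))  # seen by both cameras
print(stereo_depth(500.0, 0.1, 340.0, None))   # seen by only one camera
```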

Hardware-wise, we're reusing the same cameras that feed our 6dof pipeline (see above). In terms of model training, we're using 125M labeled frames supplied from a Project Aria dataset. We're also supplementing this with synthetic hand data generated using DART.

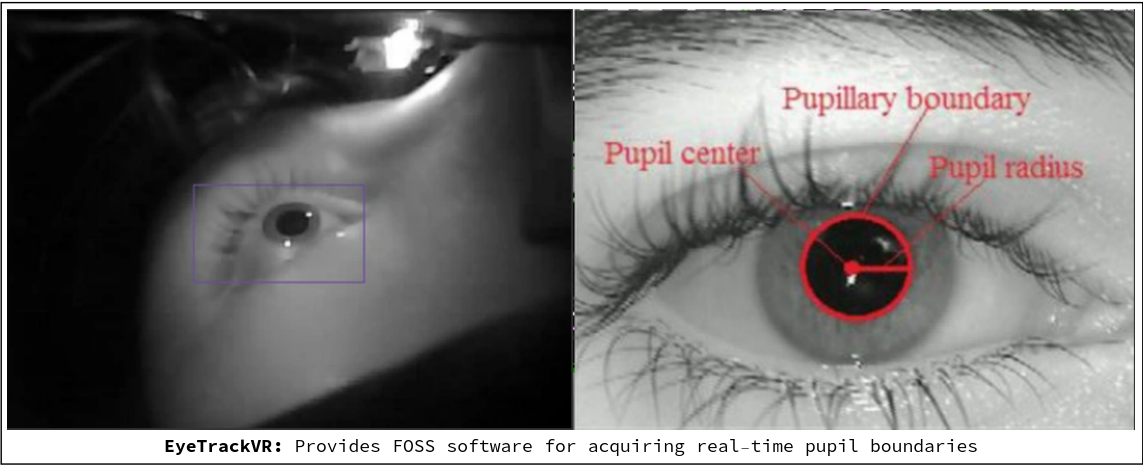

3.3 Auto IPD & Eye Tracking (1 month of hardware validation; 3 months of motor work)

We've joined forces with the devs at EyeTrackVR to help finish our eye-tracking system. Basically, they're helping us implement a monado interface for IPD data while we finish our Eye Tracking Boards.

Our Eye Tracking Boards will illuminate each eye with a small amount of infrared light, enabling a camera feed to get relatively high-contrast images for each eye. EyeTrackVR's open-source software then determines pupil boundaries to provide us with IPD info through monado.
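A minimal sketch of the dark-pupil idea, assuming IR illumination makes the pupil the darkest region of each eye image: threshold the frame and take the centroid of the dark pixels, then compute IPD as the distance between the two pupil centers once they've been mapped into a shared headset coordinate frame (that calibration step is not shown). This is a simplification, not EyeTrackVR's actual algorithm; the threshold value and function names are hypothetical.

```python
import math


def dark_pupil_center(image, threshold=50):
    """Centroid (x, y) of pixels darker than `threshold`, or None if none found.

    `image` is a list of rows of 8-bit grayscale values.
    """
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value < threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)


def ipd_mm(left_pupil_mm, right_pupil_mm):
    """IPD as the distance between 3D pupil centers in a shared headset frame (mm)."""
    return math.dist(left_pupil_mm, right_pupil_mm)


# Usage: a tiny synthetic 5x5 "eye image" with a two-pixel dark pupil.
img = [[200] * 5 for _ in range(5)]
img[2][2] = 10
img[2][3] = 10
print(dark_pupil_center(img))
print(ipd_mm((-31.5, 0.0, 0.0), (31.5, 0.0, 0.0)))
```

Real eye images have eyelashes, reflections, and partial occlusion, which is why production trackers fit ellipses to the pupil boundary rather than taking a plain centroid.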

Regarding our IPD motor system, we unfortunately lost our supplier for the piezo motors we had already designed around :( This means we have to rework our design around a non-piezo motor. We expect these adjustments to take about 3 months (we're working on them now, ahead of the eye-tracking electronics).

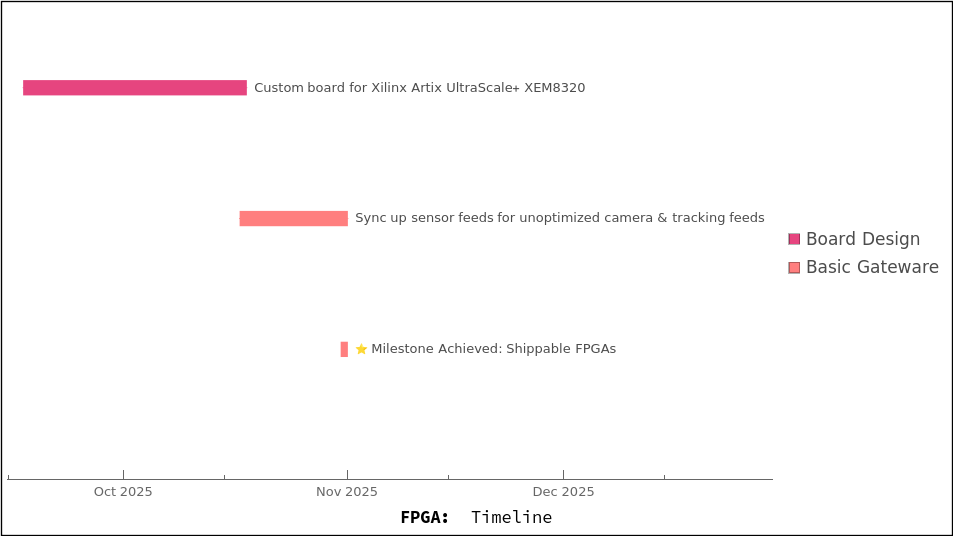

3.4 FPGA Board & Gateware (2 months for something shippable)

Making our FPGA Board and its gateware is a larger item which can be divided into the following components:

- Making the actual board (1 month remaining): This involves making a custom board for our Xilinx Artix UltraScale+ XEM8320.

- Achieving "Basic Functionality" in Gateware (2 weeks): This involves syncing up camera and sensor feeds into Simula for a basic/unoptimized AR Mode and tracking capabilities.

- Fully Optimized Functionality in Gateware (~2 months): Fully optimized gateware involves offloading all or most of our tracking load (or whatever is feasible) onto the FPGA. It also involves a fully optimized image processing pipeline for AR Mode (including things like auto white balance, color correction, gamma correction, etc.). It could also involve offloading some of our eye-tracking load from the host to the FPGA (though early indications suggest that keeping it on the host computer could be the better option). Strictly speaking, we don't need fully optimized gateware to ship our headsets, since we can push those optimizations later as software updates on GitHub.
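As a rough software sketch of the AR Mode image-processing stages named above, the following chains gray-world auto white balance (one common, simple heuristic, chosen here purely for illustration), a 3x3 color correction matrix, and a power-law gamma curve. Real gateware would implement these as streaming HDL on the FPGA; the function names and the identity CCM default are assumptions.

```python
def gray_world_awb(pixels):
    """Gray-world AWB: scale each channel so its mean matches the overall gray mean."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [tuple(p[c] * gains[c] for c in range(3)) for p in pixels]


def apply_ccm(pixels, ccm):
    """Color correction: multiply each RGB triple by a 3x3 matrix."""
    return [tuple(sum(ccm[r][c] * p[c] for c in range(3)) for r in range(3))
            for p in pixels]


def gamma_encode(pixels, gamma=2.2):
    """Clamp to [0, 255] and apply a simple power-law gamma curve."""
    def enc(v):
        v = min(max(v, 0.0), 255.0)
        return 255.0 * (v / 255.0) ** (1.0 / gamma)
    return [tuple(enc(v) for v in p) for p in pixels]


IDENTITY_CCM = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # placeholder; real CCMs come from calibration


def process(pixels, ccm=IDENTITY_CCM):
    """White balance -> color correction -> gamma, in the usual ISP order."""
    return gamma_encode(apply_ccm(gray_world_awb(pixels), ccm))


# Usage: a reddish-tinted "image" of two pixels comes out neutral gray.
frame = [(200, 100, 100), (100, 50, 50)]
print(process(frame))
```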

Since this is a larger item on our list, we're looking to parallelize it if at all possible (this isn't reflected in our current time estimate).

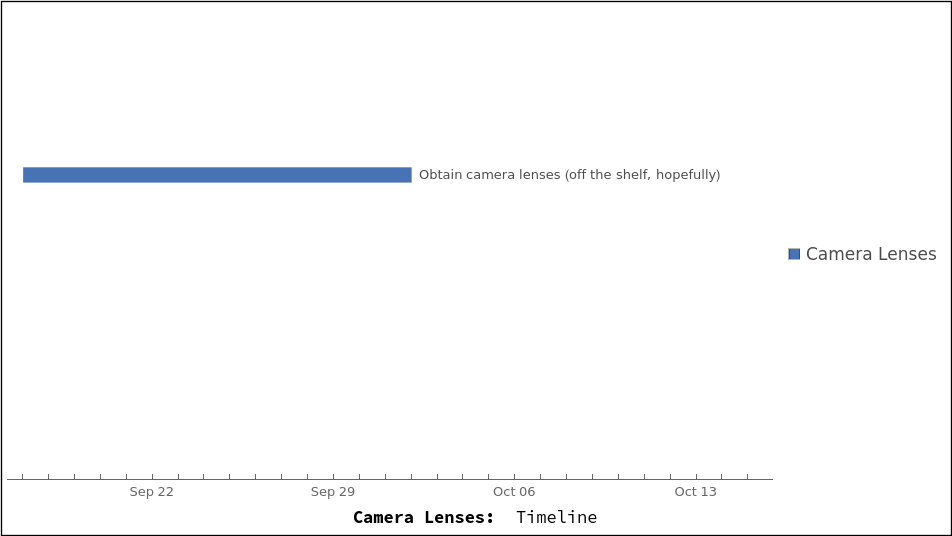

3.5 Fixing Camera Lenses (2 weeks)

We still need to obtain camera lenses that reasonably optimize our AR Mode's depth, FOV, and other optical qualities. The main problem we're facing: the lenses currently mounted to our image boards are too long, making them hard to fit into our new (smaller) headset enclosure.

Though lenses are off-the-shelf products that are generally easy to find, there's still a chance we might need a custom solution to make everything fit properly. If we don't, we're not looking at much work (mostly trying off-the-shelf lenses and seeing whether we can accommodate our exterior around them). If we do, it could take up to 2 months.
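To see why lens choice is a balancing act, the pinhole (rectilinear) model gives horizontal FOV = 2·atan(w / 2f) for sensor width w and focal length f, so shorter focal lengths give a wider view. The 4.8 mm sensor width below is a hypothetical round number, not the IMX900's actual dimensions, and fisheye lenses follow a different projection, so treat this as a rough sketch.

```python
import math


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Pinhole-model horizontal FOV: 2 * atan(w / (2 f)), in degrees.

    Only valid for rectilinear lenses; fisheye lenses use a different projection.
    """
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


# Hypothetical 4.8 mm-wide sensor with a few candidate focal lengths.
for f in (2.4, 3.6, 6.0):
    print(f"f = {f} mm -> {horizontal_fov_deg(4.8, f):.1f}° horizontal FOV")
```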

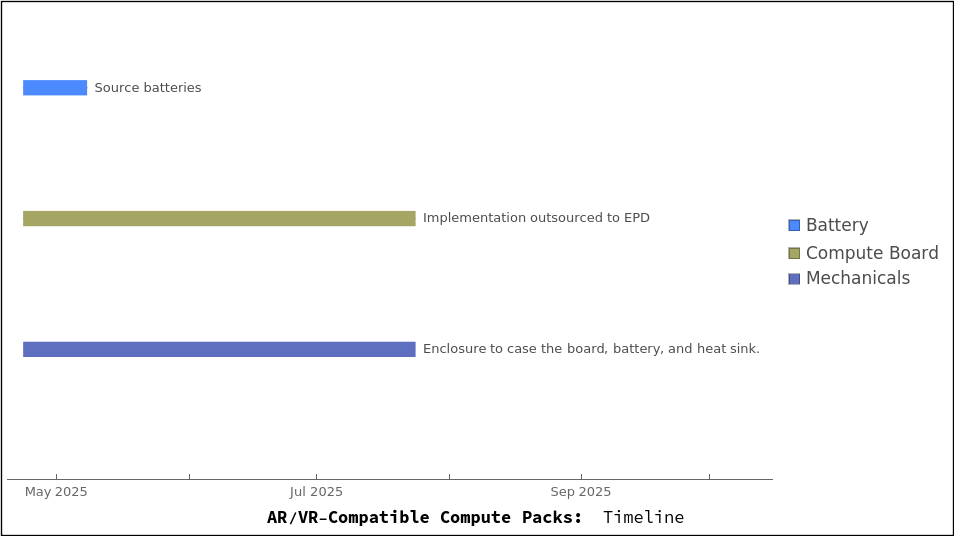

3.6 Compute Pack: Compute Board, Heat Sink, Battery & Enclosure (~3 months in tandem)

This section outlines the details associated with our Compute Packs.

3.6.1 AMD Ryzen 8000 Compute Board (~3 months)

We're now working with EEPD to adapt their NUCU SBC design into our compute pack. We switched from the Intel NUC line to AMD to take advantage of better graphics performance (and also because Intel discontinued the NUC line). The updated specs of our compute board (compared to the older Intel 13th Gen NUC) are as follows:

| Spec | Intel NUC (Raptor Lake 13th Gen) | NUCU (AMD Ryzen™ Embedded 8840U) |
|---|---|---|
| Manufacturer | Intel | AMD |
| Platform | Linux | Linux |
| CPU | 13th Gen Intel Core i7-1365U | Ryzen 7 8840U |
| Cores | 10 (2 "Performance Cores" + 8 "Efficiency Cores") | 8 |
| Threads | 12 | 16 |
| TDP | 12W - 28W | 15W - 30W |
| CPU Frequency | P-Cores: 1.8GHz - 5.2GHz Turbo; E-Cores: 1.3GHz - 3.9GHz Turbo | 3.3GHz - 5.1GHz |
| Memory Capacity | 16 GB LPDDR5 (32 GB LPDDR5 for Founders Edition & Upgrades) | ? |
| Type of RAM | LPDDR5 | DDR5 |
| RAM Frequency | 6000 MT/s | 5600 MT/s |
| Peak Memory Bandwidth | 96 GB/s | 89.6 GB/s |
| GPU | Iris Xe graphics | AMD RDNA3 Radeon Graphics |

The main trade-offs involved in switching to AMD are a slightly higher power envelope (15-30W vs 12-28W) and slightly lower peak memory bandwidth (89.6 vs 96 GB/s), but in exchange we get the superior integrated graphics of RDNA3. This is going to improve the performance of our compositor quite a bit over our original Intel 11th Gen NUCs.
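For reference, theoretical peak memory bandwidth is the transfer rate multiplied by the bus width in bytes. A minimal sketch, assuming a 128-bit (dual-channel) memory bus on both boards and decimal gigabytes; real sustained bandwidth will be noticeably lower than these peaks.

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int = 128) -> float:
    """Theoretical peak bandwidth in decimal GB/s.

    MT/s * (bits per transfer / 8) gives MB/s; divide by 1000 for GB/s.
    Assumes a 128-bit (dual-channel) bus by default.
    """
    return transfer_rate_mt_s * (bus_width_bits / 8) / 1000


print(peak_bandwidth_gb_s(6000))  # LPDDR5 at 6000 MT/s
print(peak_bandwidth_gb_s(5600))  # DDR5 at 5600 MT/s
```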

3.6.2 Compute Pack Enclosure & Strap (~3 months in tandem with Compute Board development)

Compute Pack Enclosure: The Compute Pack Enclosure must accommodate the Compute Board, its heat sink, and a battery, and do all of this while channeling heat away from the user's head. We have some teaser shots of this item but would like to cover it in a dedicated post soon.

Strap: The plan here is basically to find an OEM that makes straps for other headsets, and then get them to make a strap for ours. This item depends on us locking in the attachment mechanism of the Compute Pack design (which we should do fairly early). If we're absolutely backed into a corner, we can fall back on off-the-shelf straps, which are easy to come by, and make our compute packs non-mountable to the strap (but we really don't want to do this!).

3.6.3 Battery (2 weeks, in tandem)

We've been on the lookout for modern lithium-ion rechargeable cells, but so far haven't found anything suitable. Our fallback solution is to just use two 18650 cells, which are readily available off the shelf and can supply enough power for our 15W-30W TDP requirements. The downside is that this will make the compute packs slightly bulkier than necessary, while also giving users ~20% less battery life (at the same weight) than we could otherwise achieve. Since speed is of the essence, we'll most likely go with the quicker option.
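As a rough sanity check on what two 18650 cells buy us, runtime is approximately usable pack energy divided by average load. This sketch assumes 3.6 V nominal, 3.0 Ah cells and 90% conversion efficiency, and treats the 15W-30W TDP range as the whole pack's average draw (a simplification, since displays, cameras, and other components draw power too). All of these numbers are illustrative assumptions, not our measured figures.

```python
def runtime_hours(load_w: float, cells: int = 2, cell_voltage_v: float = 3.6,
                  cell_capacity_ah: float = 3.0, efficiency: float = 0.9) -> float:
    """Rough runtime estimate: usable pack energy (Wh) / average load (W).

    All default parameters are illustrative assumptions.
    """
    pack_wh = cells * cell_voltage_v * cell_capacity_ah  # nominal pack energy
    return pack_wh * efficiency / load_w


for load in (15.0, 30.0):
    print(f"{load:.0f} W -> ~{runtime_hours(load):.2f} h")
```

Because runtime scales linearly with usable energy, a pack that holds ~20% less energy at the same weight translates directly into ~20% less runtime.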

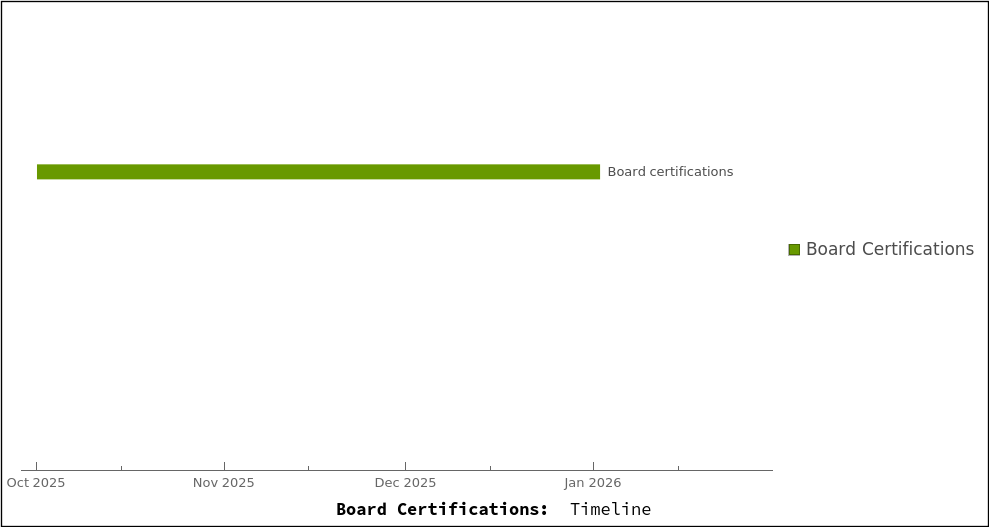

3.7 Board Certifications (3 Months)

Though we are technically selling "dev kits" and are therefore granted some leeway, U.S. & EU law still requires us to satisfy the following compliance items before shipping our headsets. In many cases, we'll be able to pre-certify our boards before they're assembled into our final product (so certification doesn't bottleneck device shipping). Overall, though, we expect this to add roughly 3 months to our shipping time, which is unfortunate.

| Compliance Item | Description | Affected Board(s) | Certification Method | Jurisdiction | Pre-Certification Available? |
|---|---|---|---|---|---|
| FCC Part 15 (for unintentional + intentional radiators) | Ensures device doesn't emit harmful EMI and operates within regulated frequency bands | FPGA Board, VXR Board, Camera Boards, Eye Tracking Board, Tracking Camera Board, Compute Board, Connector Board | External testing at FCC-accredited lab | U.S. | Partial |
| General Product Safety Regulation (GPSR) | Verifies electrical safety for specified voltage ranges | FPGA Board, VXR Board, Eye Tracking Board, Tracking Camera Board, Compute Board, Connector Board | Self-certification with DoC | EU | Yes |
| Electromagnetic Compatibility Directive (EMC Directive) | Ensures electromagnetic compatibility with other nearby devices | FPGA Board, VXR Board, Camera Boards, Eye Tracking Board, Tracking Camera Board, Compute Board, Connector Board | Self-certification with DoC | EU | Partial |
| Radio Equipment Directive (RED) | Ensures proper use of radio spectrum for wireless devices | FPGA Board, Connector Board | External testing by notified body | EU | Yes |
| Restriction of Hazardous Substances (RoHS) | Verifies absence of hazardous substances | All boards | Self-certification with material declarations | EU | Yes |
| UN 38.3 | Ensures lithium-ion batteries are safe for transportation | Compute Board Battery | External testing at certified lab | International | Yes |
| IEC 62133 | Evaluates battery safety under normal or abusive conditions; not required if we use off-the-shelf batteries | Compute Board Battery | External testing at certified lab | International | Yes |

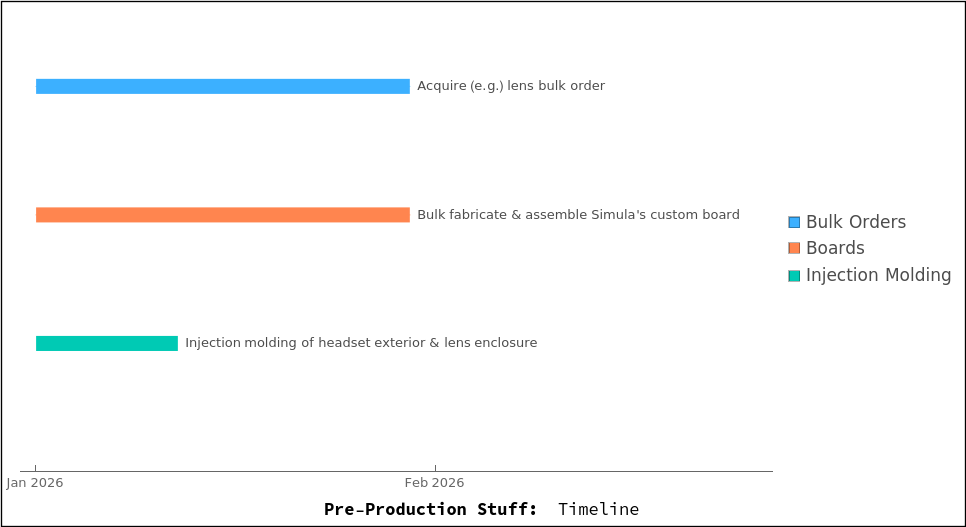

3.8 Pre-Production Bulk Ordering & Board Manufacturing (1-2 months)

The following will be run in parallel towards the end of the project:

- Acquire Lens Bulk Order (~4 weeks): Right now we have 20 remaining samples of our custom 3-piece lenses (enough to make ~10 additional headset units). It will take about 4 weeks of lead time to receive our bigger bulk order of 1K lenses (enough for 500 headsets).

- Fabricate & Assemble Simula's Custom Boards (4 weeks): This includes the Display Boards, VXR Board, Connector Board, FPGA Board, etc. We have a small number of samples of these boards for Dev Kits, but for the final batch we'll need to assemble more of them.

- Injection Molding of our Headset Exterior & Lens Enclosure (1-2 months): For Dev Kits we've been using a combination of FDM, SLS & resin printing, but for our final release we want to use actual injection molds.

3.9 Putting it all together